Context Evals

AI quality that improves with every use

Define what good looks like for your team. Your experts correct mistakes, and Context learns. Quality gets better every day, automatically.

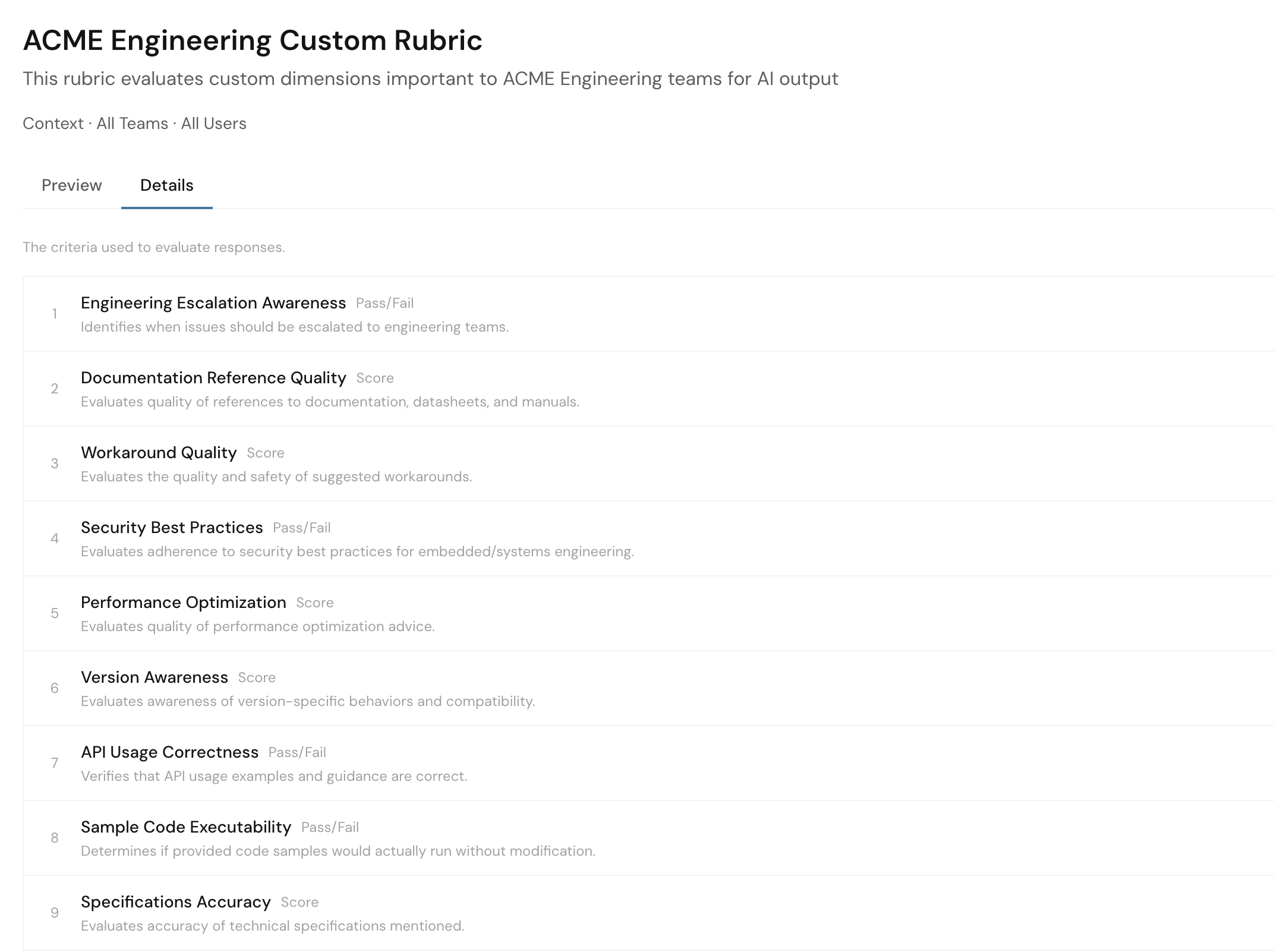

CUSTOM RUBRICS

Quality defined by your team

Define evaluation criteria with specific dimensions, scoring scales, and judge logic. Finance tracks compliance and accuracy. Legal tracks citations. Engineering tracks security. Every team defines what matters.
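To make "dimensions, scoring scales, and judge logic" concrete, here is a minimal sketch of how a rubric could be represented. It is illustrative only: `Dimension`, `Rubric`, the weights, and the 1 to 5 scale are hypothetical names and values, not Context's actual API or schema.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class Dimension:
    """One rubric dimension (hypothetical structure, not Context's schema)."""
    name: str
    weight: float
    scale_min: int = 1
    scale_max: int = 5


@dataclass(frozen=True)
class Rubric:
    team: str
    dimensions: tuple[Dimension, ...]

    def score(self, ratings: dict[str, int]) -> float:
        """Weighted average of per-dimension ratings, normalized to 0..1."""
        total_weight = sum(d.weight for d in self.dimensions)
        total = 0.0
        for d in self.dimensions:
            rating = ratings[d.name]
            if not d.scale_min <= rating <= d.scale_max:
                raise ValueError(f"{d.name}: rating {rating} is outside the scale")
            # Map the rating onto 0..1 so dimensions with different scales mix cleanly.
            normalized = (rating - d.scale_min) / (d.scale_max - d.scale_min)
            total += d.weight * normalized
        return total / total_weight


# Assumed example: a finance rubric that weights compliance twice as much as accuracy.
finance = Rubric(
    team="finance",
    dimensions=(Dimension("compliance", weight=2.0), Dimension("accuracy", weight=1.0)),
)
finance_score = finance.score({"compliance": 5, "accuracy": 3})
```

In this sketch the ratings themselves would come from a judge (a person or a model); the rubric only fixes which dimensions exist and how they combine.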

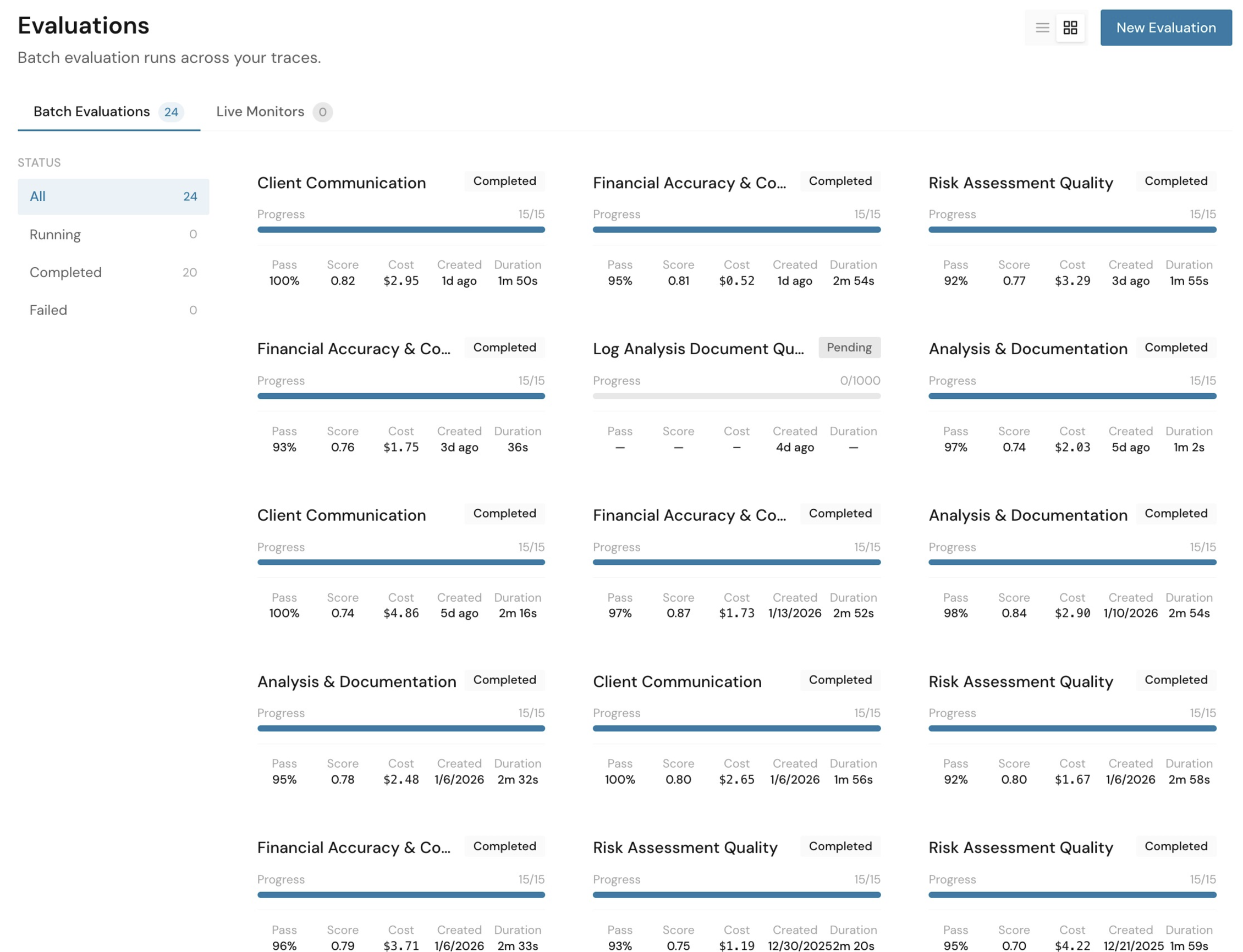

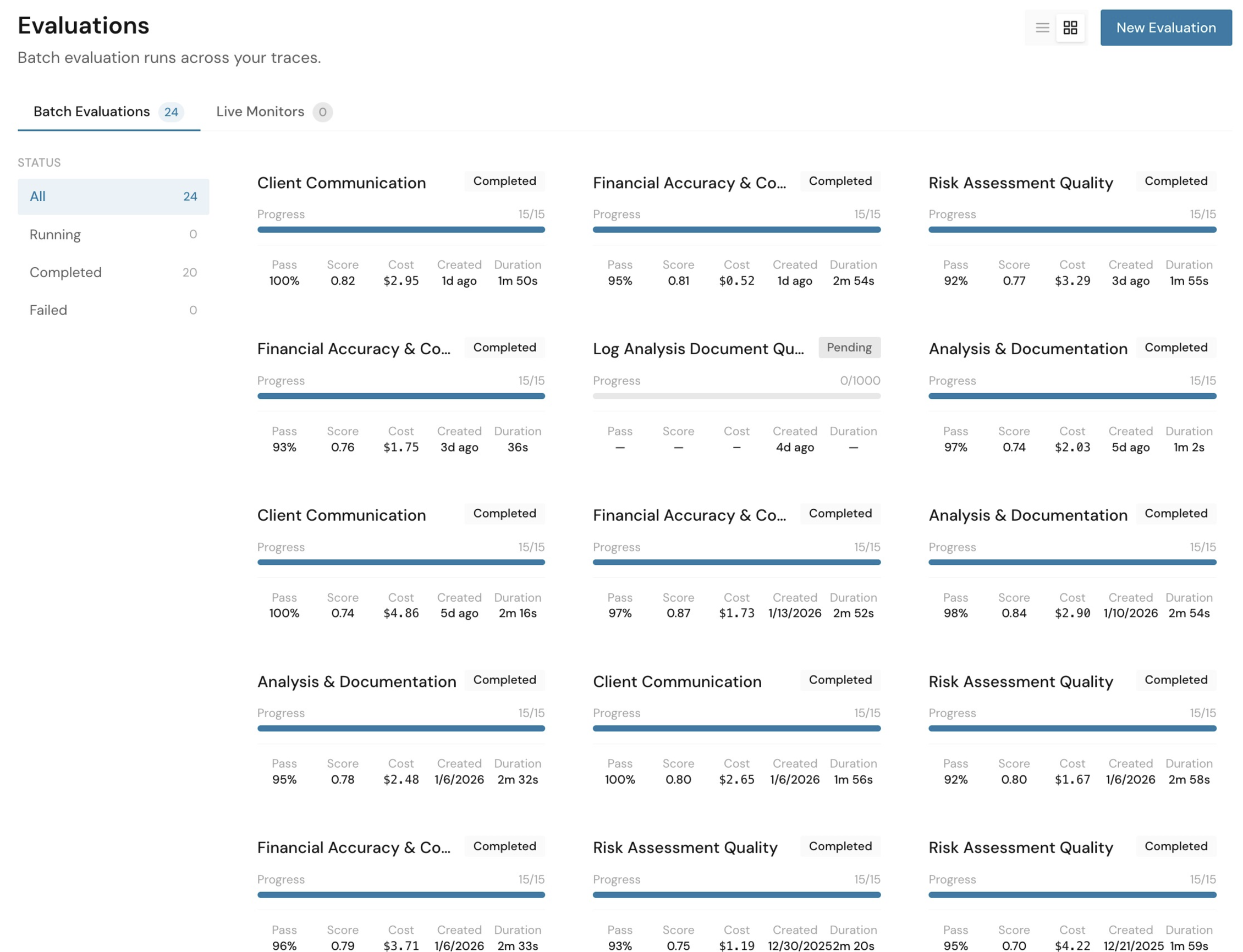

CONTINUOUS EVALUATION

Run continuous evaluations over your data

Automatically evaluate every response against your rubrics. Track quality metrics over time, catch regressions instantly, and ensure consistent performance across your entire dataset.
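One simple way to think about catching regressions is to compare recent rubric scores against an earlier baseline. The sketch below assumes scores are already normalized to 0..1 and ordered by time; `detect_regression`, `window`, and `tolerance` are hypothetical names chosen for illustration and do not describe how Context detects regressions.

```python
from statistics import mean


def detect_regression(scores, window=3, tolerance=0.05):
    """Return True when the mean of the last `window` scores falls more than
    `tolerance` below the mean of all earlier scores.

    Illustrative heuristic only; thresholds are assumptions.
    """
    if len(scores) < 2 * window:
        return False  # not enough history to compare against a baseline
    baseline = mean(scores[:-window])
    recent = mean(scores[-window:])
    return baseline - recent > tolerance


steady = [0.90, 0.90, 0.90, 0.90, 0.90, 0.90]
dropped = [0.90, 0.90, 0.90, 0.70, 0.70, 0.70]
steady_flag = detect_regression(steady)
dropped_flag = detect_regression(dropped)
```

A production system would likely account for noise and sample size, but the same baseline-versus-recent comparison is the core idea.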

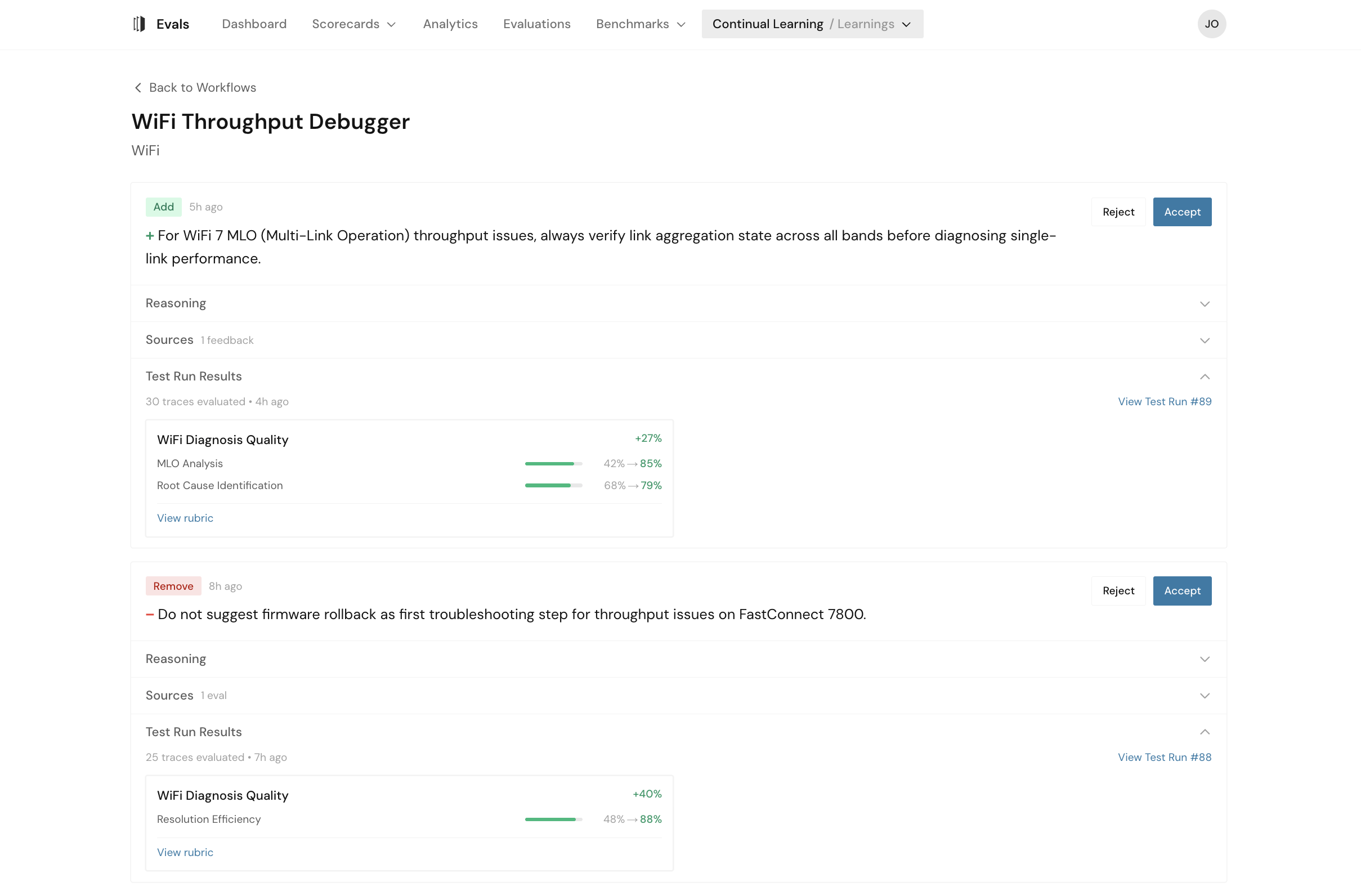

LEARNING FROM EXPERTS

Corrections become improvements

When an expert fixes a mistake, Context learns from it. There is no separate training process: improvements happen automatically as your team works.

CUSTOM BENCHMARKING

Custom benchmarking suite

Create test suites from real conversations. Run structured evaluations across model versions, prompt iterations, and configuration changes. Build confidence in every deployment.
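As a rough illustration of running one test suite across several versions, the sketch below scores each candidate against the same cases and reports a pass rate. Everything here is an assumption for the sake of the example: `run_suite`, the `(prompt, expected)` case format, the exact-match judge, and the toy candidates are hypothetical and stand in for real model versions, prompts, or configurations.

```python
def run_suite(cases, candidates, judge):
    """Run every candidate over the same cases and return its pass rate.

    cases: list of (prompt, expected) pairs
    candidates: mapping of name -> callable(prompt) returning a response
    judge: callable(response, expected) returning True or False
    """
    results = {}
    for name, generate in candidates.items():
        passed = sum(1 for prompt, expected in cases if judge(generate(prompt), expected))
        results[name] = passed / len(cases)
    return results


# Toy data standing in for cases drawn from real conversations.
cases = [("2+2", "4"), ("capital of France", "Paris")]

# Toy "versions": lookup tables in place of real model calls.
v1_answers = {"2+2": "4"}
v2_answers = {"2+2": "4", "capital of France": "Paris"}
candidates = {
    "v1": lambda prompt: v1_answers.get(prompt, ""),
    "v2": lambda prompt: v2_answers.get(prompt, ""),
}


def exact_match(response, expected):
    return response == expected


suite_results = run_suite(cases, candidates, exact_match)
```

Holding the cases and the judge fixed while varying only the candidate is what makes the pass rates comparable between versions.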

MODEL DEVELOPMENT

Custom model development

Use your rubrics and expert feedback to train custom models through reinforcement learning. Your team's corrections become the training signal, creating models optimized for your specific domain and quality standards.
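One common way to turn corrections into a training signal is to store each one as a preference pair, with the expert's version preferred over the model's original output. The page does not say how Context does this, so the sketch below is an assumption throughout: `Correction`, `to_preference_pairs`, and the `prompt`/`chosen`/`rejected` field names are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Correction:
    """An expert edit of a model response (hypothetical record shape)."""
    prompt: str
    model_output: str
    expert_output: str


def to_preference_pairs(corrections):
    """Convert corrections into prompt/chosen/rejected records."""
    pairs = []
    for c in corrections:
        if c.expert_output.strip() == c.model_output.strip():
            continue  # the expert left the output unchanged, so there is no preference
        pairs.append(
            {"prompt": c.prompt, "chosen": c.expert_output, "rejected": c.model_output}
        )
    return pairs


corrections = [
    Correction("Summarize clause 4.", "Clause 4 covers fees.", "Clause 4 covers termination."),
    Correction("Greet the user.", "Hello!", "Hello!"),
]
pairs = to_preference_pairs(corrections)
```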

Deploy where you need it

Context adapts to your infrastructure and security requirements, with four deployment options: our managed cloud, a dedicated private instance, deployment in your VPC, or a fully on-premises installation.

Talk to Sales→

Get started instantly with our fully managed cloud platform. We handle all infrastructure, updates, and scaling.

Get started now→